Generic HTTPS configuration

With event streaming, you can configure Strivacity to send audit logs and account events to any HTTPS endpoint. This gives you the flexibility to forward events to your own infrastructure, including services that don't accept direct internet traffic. A common use case is pushing Strivacity logs to AWS, where they can be stored in S3 and consumed by multiple downstream systems.

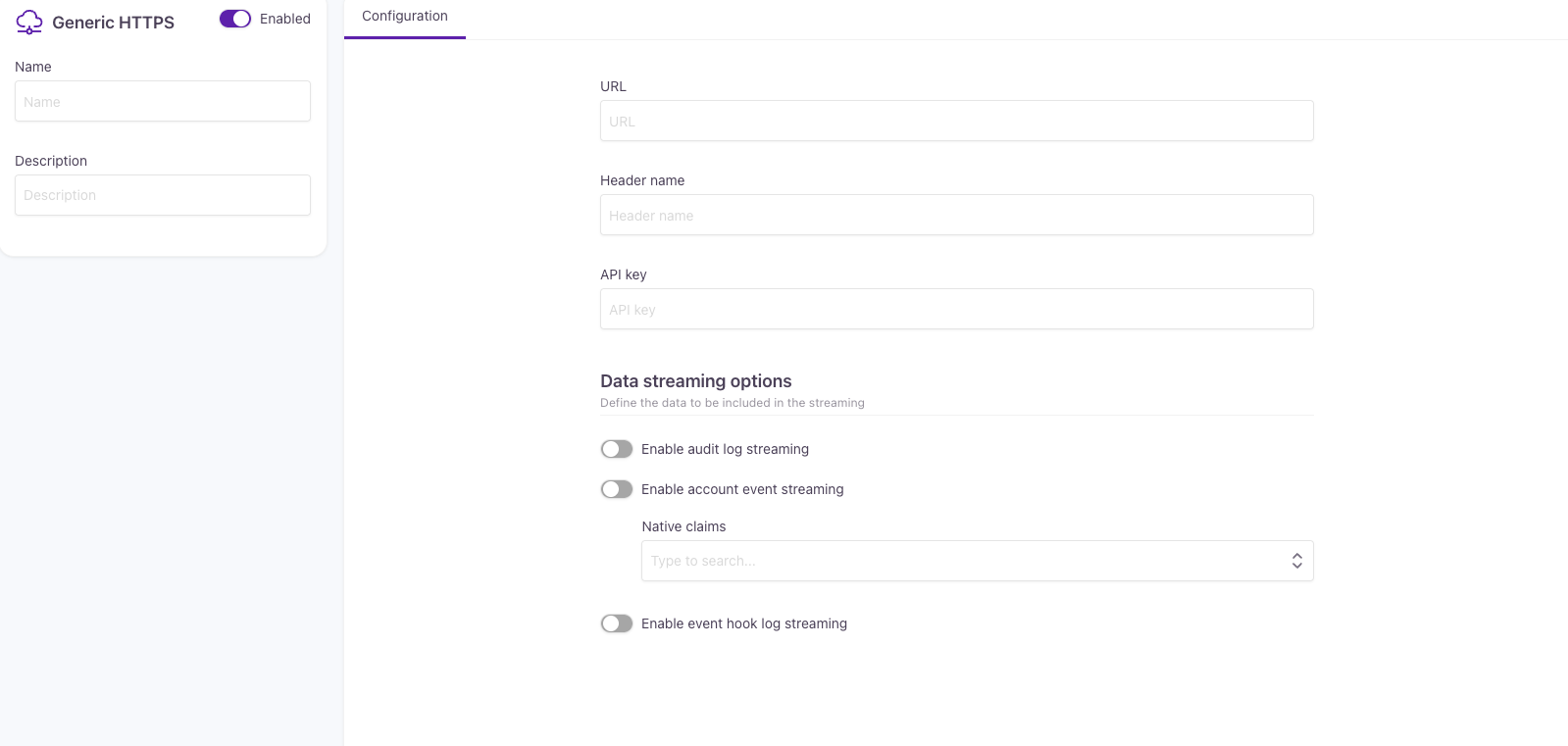

Configuring generic HTTPS event streaming

When creating a generic HTTPS configuration, you will be asked for the following information:

| Field | Description |
|---|---|
| Name | A name for your generic HTTPS configuration. |
| Description | A brief description. |
| URL | The endpoint URL where Strivacity will send events. |
| Header name | The HTTP header used for authentication. |
| API key | The value of the authentication key. |
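
If you operate the receiving endpoint yourself, the Header name and API key fields amount to a check on each incoming request. The following is a minimal sketch, assuming the receiver sees the request headers as a dictionary. The function name `is_authorized` and the sample header values are hypothetical and not part of Strivacity.

```python
import hmac


def is_authorized(headers: dict, header_name: str, expected_key: str) -> bool:
    """Return True if the configured header carries the expected API key."""
    # HTTP header names are case-insensitive, so normalize before the lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    presented = normalized.get(header_name.lower())
    if presented is None:
        return False
    # compare_digest avoids leaking key contents through timing differences.
    return hmac.compare_digest(presented.encode(), expected_key.encode())


if __name__ == "__main__":
    # Hypothetical incoming request headers.
    incoming = {"X-Api-Key": "example-secret", "Content-Type": "application/json"}
    print(is_authorized(incoming, "x-api-key", "example-secret"))
    print(is_authorized(incoming, "x-api-key", "wrong-secret"))
```

A receiver would typically reject the request with a 401 or 403 status when this check fails.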

Data streaming options

- Enable audit log streaming: Sends the entire audit log to the configured HTTPS endpoint. Certain policy changes (for example, Branding policy, Notification policy, and Lifecycle event hooks) may appear with empty request and response fields.

- Enable account event streaming: Sends customer-centric account events (for example, login attempts, password changes).

  - By default, streaming is limited to the basic information available in the header of an account event. Only high-level details are forwarded, such as the customer's identity, the timestamp of the action, and labels indicating success or failure. The detailed steps (for example, identification started, MFA selection started) are not included.

  - If additional account information is required, you can enable native claims. You select them in a UI field that lists all native claims available in your instance, and the selected claims are then included in the event payload.

- Enable event hook streaming: Sends logs generated by Lifecycle event hooks as part of account events. This option provides visibility into hook execution without accessing hook code directly.

AWS S3 setup example

A common use case for the Generic HTTPS vendor is streaming events into AWS S3. This allows you to persist raw data for analytics, compliance, or downstream processing. To achieve this, Strivacity sends events to an API Gateway endpoint. From there, Lambda, Kinesis, and Firehose deliver the events into S3.

The typical flow is:

Strivacity → API Gateway → Lambda → Kinesis Data Stream → Kinesis Firehose → S3 Bucket.

Prerequisites

- Access to the AWS Management Console.

- IAM permissions to create and configure S3, Kinesis, Lambda, and API Gateway resources.

Detailed steps

- Create an S3 bucket to store your Strivacity events.
  - In the AWS Management Console, open S3.
  - Select Create bucket.
    - Bucket name: choose a unique name, for example, `strivacity-events-bucket`.
    - Keep default settings for Block Public Access (recommended to keep it enabled).

- Set up a Kinesis Data Stream: This stream will be the central hub for your incoming data.
  - In the console, open Kinesis.
  - Select Create data stream.
    - Data stream name: for example, `strivacity-events-data-stream`.
    - Capacity mode: choose On-demand (auto-scaling).
  - Wait until the stream status becomes Active.

- Create a Firehose Delivery Stream: Firehose will consume data from your Kinesis Data Stream, transform it, and deliver it to your raw data S3 bucket.
  - In Kinesis Firehose, create a new delivery stream:
    - Name: for example, `strivacity-events-firehose`.
    - Source: Amazon Kinesis Data Streams.
      - Kinesis stream: select `strivacity-events-data-stream`.
    - Destination: Amazon S3.
      - S3 bucket: choose `strivacity-events-bucket`.
    - IAM role:
      - AWS will automatically create a role (read from Kinesis, write to CloudWatch Logs).
      - Add permission to write to the S3 bucket.

- Configure API Gateway: API Gateway will receive incoming HTTP requests and push them to your Kinesis Data Stream.
  - In API Gateway, create a new REST API.
  - Create a resource, for example, `/logs`.
  - Add a POST method.
    - Integration action: PutRecord (to your Kinesis stream).
  - Deploy the resource to a stage (for example, `dev`).
  - Secure the endpoint with an API key:
    - API Gateway > API keys > Create API key.
    - Create a Usage plan > link it to your API stage > attach the API key.
    - Mark the POST `/logs` method: Method Request > API Key Required = true.
    - Re-deploy the API.

- Deploy a Lambda function: This Lambda function receives the incoming event and writes it to the Kinesis Data Stream.
  - In Lambda, create a function:
    - Function name: for example, `process-http-data`.
    - Runtime: Choose Python (or Node.js, or Java).
  - Attach an inline IAM policy with `kinesis:PutRecord`.
  - Example code:

```python
import json

import boto3

# Kinesis client and the name of the target data stream.
kinesis_client = boto3.client('kinesis')
STREAM_NAME = 'strivacity-events-data-stream'


def lambda_handler(event, context):
    print("Received event:", json.dumps(event))
    try:
        # If the event carries a 'body' field (a JSON string), parse it;
        # otherwise treat the whole event as the payload.
        if 'body' in event:
            payload = json.loads(event['body'])
        else:
            payload = event

        data_str = json.dumps(payload)
        # Note: a constant partition key sends every record to the same shard.
        response = kinesis_client.put_record(
            StreamName=STREAM_NAME,
            Data=data_str,
            PartitionKey='partitionKey'
        )
        print("Successfully put to Kinesis:", response)
        return {
            'statusCode': 200,
            'body': json.dumps({
                'message': 'Record sent',
                'sequenceNumber': response['SequenceNumber']
            })
        }
    except Exception as e:
        print("Error putting record to Kinesis:", str(e))
        return {
            'statusCode': 500,
            'body': json.dumps({
                'message': 'Error putting record to Kinesis',
                'error': str(e)
            })
        }
```
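
The console steps for the bucket, the data stream, and the Firehose delivery stream can also be scripted. The following is a rough sketch using boto3 with the example resource names from the steps. It is not a procedure prescribed by Strivacity. The region and role ARN are placeholders you supply, the IAM role is assumed to exist already with read access to the stream and write access to the bucket, and the helper names (`lambda_policy`, `firehose_params`, `provision`) are hypothetical.

```python
BUCKET = "strivacity-events-bucket"
STREAM = "strivacity-events-data-stream"
FIREHOSE = "strivacity-events-firehose"


def lambda_policy(stream_arn: str) -> dict:
    """Inline IAM policy letting the Lambda function put records to the stream."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "kinesis:PutRecord", "Resource": stream_arn}
        ],
    }


def firehose_params(stream_arn: str, role_arn: str) -> dict:
    """Arguments for create_delivery_stream: Kinesis source, S3 destination."""
    return {
        "DeliveryStreamName": FIREHOSE,
        "DeliveryStreamType": "KinesisStreamAsSource",
        "KinesisStreamSourceConfiguration": {
            "KinesisStreamARN": stream_arn,
            "RoleARN": role_arn,
        },
        "ExtendedS3DestinationConfiguration": {
            "RoleARN": role_arn,
            "BucketARN": f"arn:aws:s3:::{BUCKET}",
        },
    }


def provision(region: str, role_arn: str) -> None:
    """Create the bucket, the on-demand data stream, and the delivery stream."""
    import boto3  # imported lazily so the helpers above work without boto3

    s3 = boto3.client("s3", region_name=region)
    if region == "us-east-1":
        # us-east-1 does not accept an explicit LocationConstraint.
        s3.create_bucket(Bucket=BUCKET)
    else:
        s3.create_bucket(
            Bucket=BUCKET,
            CreateBucketConfiguration={"LocationConstraint": region},
        )
    # Keep Block Public Access fully enabled on the bucket.
    s3.put_public_access_block(
        Bucket=BUCKET,
        PublicAccessBlockConfiguration={
            "BlockPublicAcls": True,
            "IgnorePublicAcls": True,
            "BlockPublicPolicy": True,
            "RestrictPublicBuckets": True,
        },
    )

    kinesis = boto3.client("kinesis", region_name=region)
    kinesis.create_stream(
        StreamName=STREAM, StreamModeDetails={"StreamMode": "ON_DEMAND"}
    )
    # Block until the stream is usable (status Active).
    kinesis.get_waiter("stream_exists").wait(StreamName=STREAM)
    summary = kinesis.describe_stream_summary(StreamName=STREAM)
    stream_arn = summary["StreamDescriptionSummary"]["StreamARN"]

    firehose = boto3.client("firehose", region_name=region)
    firehose.create_delivery_stream(**firehose_params(stream_arn, role_arn))
```

Calling `provision("YOUR-REGION", "YOUR-ROLE-ARN")` with real values would create the three resources. The API Gateway and Lambda steps are left to the console here.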

Test the setup

Use curl to verify that your setup works:

```bash
curl -X POST \
  -H "Content-Type: application/json" \
  -H "x-api-key: YOUR_API_KEY" \
  -d '{"log":"My test log"}' \
  https://YOUR-API-ID.execute-api.YOUR-REGION.amazonaws.com/dev/logs
```

Expected result:

- A 200 OK response.

- The record stored in your S3 bucket (`strivacity-events-bucket`).
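
The same test can be driven from Python. This is a minimal sketch using only the standard library. The URL and API key are the same placeholders as in the curl command, and the helper names `build_test_request` and `send_test_request` are hypothetical.

```python
import json
import urllib.request


def build_test_request(url: str, api_key: str, payload: dict) -> urllib.request.Request:
    """Build a POST request that carries the API key in the x-api-key header."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-api-key": api_key},
        method="POST",
    )


def send_test_request(request: urllib.request.Request) -> int:
    """Send the request and return the HTTP status code.

    urlopen raises urllib.error.HTTPError for 4xx and 5xx responses.
    """
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

To run it, build the request with your own endpoint URL and key, for example `build_test_request("https://YOUR-API-ID.execute-api.YOUR-REGION.amazonaws.com/dev/logs", "YOUR_API_KEY", {"log": "My test log"})`, and pass the result to `send_test_request`.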
